In this guest blog post, Ruslan Shcherbin, Senior Software Engineer from Inigo, shares his experience of exploring various database alternatives and eventually choosing ClickHouse paired with Materialized Views to handle their high-performance analytics needs.

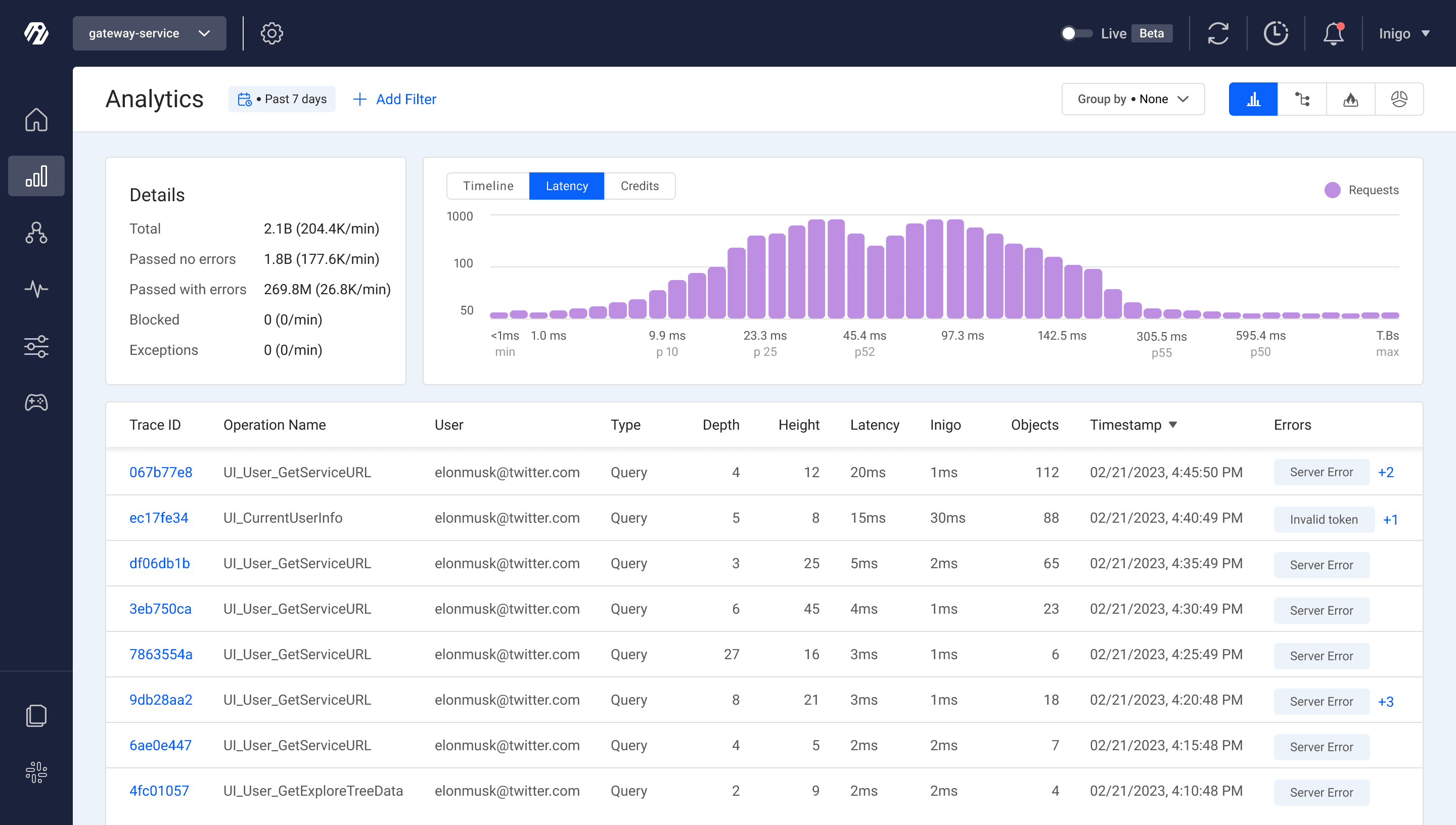

Inigo is a pioneering company in the GraphQL API management industry, specializing in comprehensive security and governance at scale. Their platform empowers API and platform teams to fully control, manage, and secure GraphQL deployments by providing immediate visibility, security, access control, compliance, and continuous delivery. In addition to its security features, Inigo offers key developer experience enhancements and workflow efficiencies, ultimately enabling organizations to expedite GraphQL API development, lower costs, and improve end-user experiences.

Overview

At Inigo, we were seeking a database solution that could handle a high volume of raw data for analytics. After exploring various alternatives, including SQLite, Snowflake and PostgreSQL, we decided to experiment with Materialized Views using ClickHouse on a relatively slow machine. In this post, we share our findings after loading billions of rows of data and conducting measurements.

We process and analyze billions of GraphQL API requests at Inigo. Live incoming API data allows us to create aggregated views on high cardinality data, generate alerts, and create dashboards based on extensive data.

Database Alternatives for Large-Scale Analytics

Before arriving at ClickHouse, we tried several other databases:

- Snowflake - too slow and costly for our needs. While it performs well for processing in-house data, it becomes quite expensive when handling real-time customer data within a product, which negatively impacts the product's unit economics. The lack of a local Snowflake emulator further complicates development, as it prevents running continuous unit tests against the database. Additionally, the user interface did not align with our preferred database usage due to the Snowflake UI dashboard being more geared toward queries on a single table. Since each service had its own separate table, we had to obtain and hardcode table names in the UI. This made it impossible to retrieve data from all tables using a single query in the user interface.

- PostgreSQL - an excellent transactional database, but unsuitable for large analytic workloads. We were able to get it to work with around 100K rows, but past that, the indexes were growing out of control and the cost of running a PostgreSQL cluster didn’t make sense. There was significant performance degradation once we hit the 100K - 1M rows mark. However, we acknowledge that this comparison might be unfair, given that PostgreSQL's OLTP nature is not ideally suited for this use case.

Why ClickHouse?

Our choice fell on ClickHouse for several reasons. ClickHouse is a columnar database specifically designed to handle high volumes of data while providing fast query execution. Materialized views in ClickHouse allow datasets to be pre-aggregated which can significantly improve the performance of analytical queries. Furthermore, ClickHouse's ability to automatically manage and update materialized views as new data is ingested simplifies the maintenance process, making it an attractive choice for those seeking an efficient and powerful analytical database solution.

The open-source nature of ClickHouse and the ability to run it as a simple binary or docker on our laptops were major attractions. The convenience of local testing, combined with its scalability, convinced us that ClickHouse was the ideal choice for our needs.

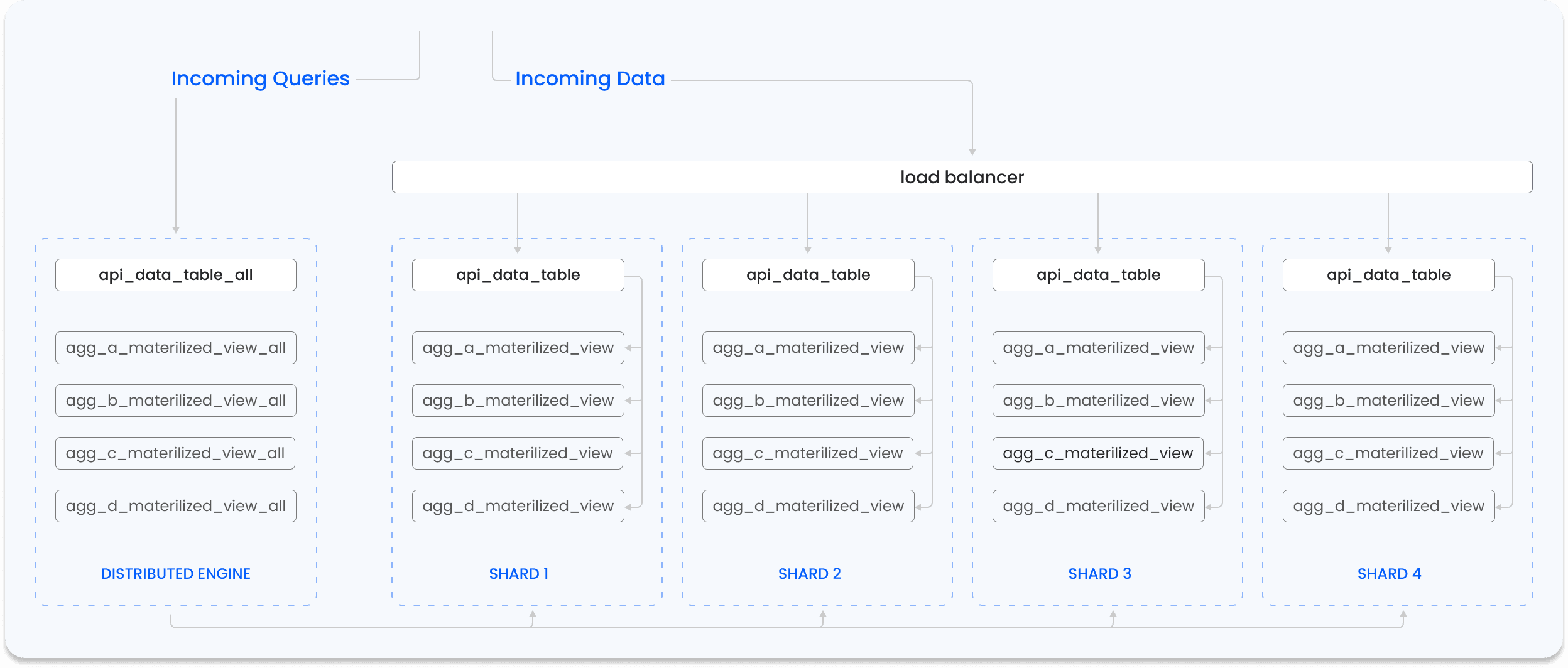

Inigo’s ClickHouse Setup

Our current ClickHouse setup is a single database where nearly every Inigo service has its own table, with some tables containing billions of rows of data. It runs on 4 vCPUs, 32GB RAM for the development environment and 16 vCPUs, 128GB RAM for the production environment.

Setting up ClickHouse was challenging, as understanding the nuances of each index, sharding, and materialized views required considerable effort. However, the end result has been a system capable of loading and querying vast amounts of analytics data in a scalable manner, both in terms of hardware cost and engineering development time.

Materialized Views in ClickHouse

Given that we have billions of rows in some of our raw tables, we strive to minimize joins whenever possible. Instead, we have consolidated the data into several materialized views, each grouping the data into distinct time window buckets. As a result, most of the time, we are querying thousands of aggregated rows instead of millions of raw data rows, resulting in faster real-time filtering.

Creating Materialized View in ClickHouse

Our raw tables all contain a timestamp column observed_at.

We then aggregate the data rows into specified intervals for the materialized tables. For example, if the interval is 1 minute, we use the toStartOfInterval(observed_at, INTERVAL 1 minute) function to aggregate data into one minute intervals. Each row in the materialized views contains several raw data values, all corresponding to a particular minute based on the observed_at column.

By creating materialized views with different intervals, such as 6 minutes, 40 minutes, and 3 hours, we can further enhance query performance and enable more efficient data analysis across various timeframes.

We exclude all columns containing unique data, such as trace_id, from materialized views. When we need to filter by trace_id, we query the raw data. It is worth noting that raw data queries are reasonably quick in ClickHouse, as ClickHouse can efficiently sift through a large number of rows in real-time using the appropriate index for each column.

Grouping Data in ClickHouse

In our analytics, we primarily use GROUP BY queries to obtain data. While standard queries and materialized view queries use the same SQL syntax, there is a slight modification in the way they handle counting. Instead of using count() to count the rows, materialized view queries use sum(calls) with the derived column calls. The column calls is created by using the function count() aliased as calls in the materialized view definition. This change allows us to aggregate the count of rows from the materialized view, resulting in more efficient querying and better performance.

Understanding the Merge and State Suffix in ClickHouse

In ClickHouse, the -State and -Merge suffixes are used with aggregation functions to handle intermediate aggregation states and merge them efficiently. These suffixes are particularly useful when working with distributed databases or when we need to combine aggregated results from multiple sources.

The State suffix: The aggregation functions with the State suffix return an intermediate aggregation state rather than the final result. These intermediate states are stored in a compact binary format that represents the aggregation in progress.

For example, if we want to calculate the 95th (0.95) and 99th (0.99) percentiles of a column called server_process_time, we can use the quantilesState(value) function. This function returns the intermediate state of the quantiles calculation, which we can store in a separate table or combine with other intermediate states. We utilize these state functions in our materialized views, storing their output in summarized tables.

The Merge suffix: The aggregation functions with the Merge suffix take the intermediate aggregation states produced by their corresponding State functions and merge them to produce the final aggregation result. This is useful when we have multiple intermediate states that we need to combine into a single aggregated result.

For instance, to obtain the 99th percentile of the aggregated server_process_time, we would use quantileMerge(0.99)(quantiles_server_process_time), where quantiles_server_process_time is the intermediate state resulting from the quantilesState function used in the materialized view. We show a complete example below:

--- receiving table for the inserts

CREATE TABLE query_data

(

`observed_at` DateTime64(3),

`sidecar_process_time` Float64,

`server_process_time` Float64

)

ENGINE=MergeTree()

ORDER BY (observed_at);

CREATE MATERIALIZED VIEW query_data_mv

ENGINE = AggregatingMergeTree()

ORDER BY (observed_at)

AS SELECT

toDateTime64(toStartOfInterval(observed_at, INTERVAL 1 MINUTE), 3) as observed_at,

quantilesState(0.95, 0.99)(sidecar_process_time) as quantiles_sidecar_process_time,

quantilesState(0.95, 0.99)(server_process_time) as quantiles_server_process_time

FROM query_data

GROUP BY observed_at;

INSERT INTO query_data (observed_at, sidecar_process_time, server_process_time) VALUES (toDateTime('2023-06-15 19:09:01'), 0, 0), (toDateTime('2023-06-15 19:09:09'), 100, 1000) (toDateTime('2023-06-15 19:19:01'), 0, 0), (toDateTime('2023-06-15 19:19:09'), 100, 1000);

--we query the materialized views underlying table with merge functions

SELECT

observed_at,

quantileMerge(0.95)(quantiles_sidecar_process_time) as p95_sidecar_process_time,

quantileMerge(0.99)(quantiles_sidecar_process_time) as p99_sidecar_process_time,

quantileMerge(0.95)(quantiles_server_process_time) as p95_server_process_time,

quantileMerge(0.99)(quantiles_server_process_time) as p99_server_process_time

FROM query_data_mv

GROUP BY observed_at;

While we are still working on recreating large materialized views, we believe we're on the path to a promising solution.

Results and Benefits of Using ClickHouse and Materialized Views

- We utilize Materialized View queries in nearly all aspects of our app, with the exception of one table with Raw data and any queries that involve filtering by a unique field.

- Switching to use Raw data when necessary is straightforward. We employ the same SQL files in our queries, with minor modifications made for Materialized Views.

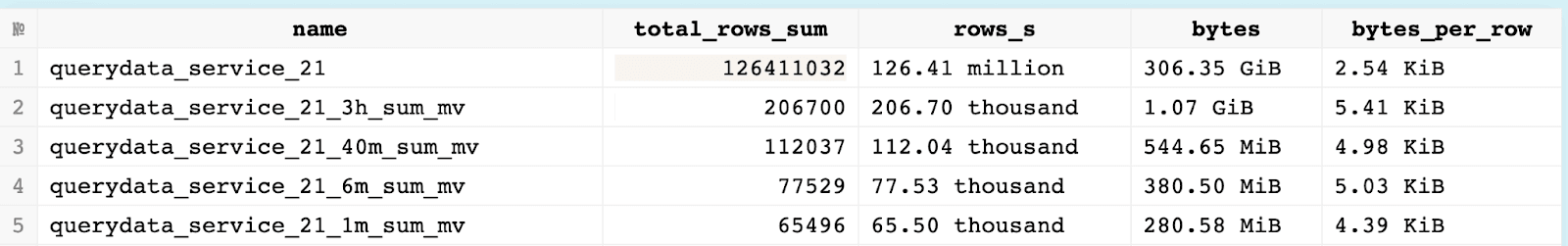

- There's a significant difference in data size. For instance, Raw data covers up to a week, while Materialized Views can accommodate up to a month's data:

- Less load on the database and faster operation of the entire App due to the smaller size of MV tables

- We can write migrations not only in SQL files, but also in Go, which is more convenient for us as we often migrate multiple tables simultaneously. Detailed example here.

- ClickHouse’s strong typing simplifies our migration logic which historically needed to allow for weaker typing guarantees in NoSQL solutions.

- The convenience of Test-Driven Development (TDD) is enhanced by the speed of our independent integration tests.

We've encountered no issues when using Materialized Views, both with standalone instances and within a clustered environment.

Performance Results of Using ClickHouse and Materialized Views

- The database is now faster than our Golang code, allowing us to use pooling and improving our UI responsiveness.

- Confidence in query speed, enables us to identify issues outside the database, such as in tracing extensions.

Using ClickHouse Cloud

The scalability of this solution is dependent on a variety of factors, one of the most important being the setup of the scaling architecture. ClickHouse offers a cloud service with 720 GiB RAM, 180 vCPU, and 10 TB of data, indicating its potential for handling significant workloads. We have been able to easily scale to 1 billion rows, and that number continues to grow.

Looking ahead, we are excited about the ClickHouse Cloud offering. Although we began using ClickHouse before this was available and devoted significant time to configuring and managing shards and disks, we anticipate moving some of our self-hosted workloads to the cloud.

Conclusion

ClickHouse has been an excellent choice for our large-scale analytics needs. Even though it has certain complexities, such as the absence of transactions, these are substantially overshadowed by its benefits. The speed, capacity to handle vast amounts of data, and features like Materialized Views make ClickHouse a robust tool for analytics. Overall, ClickHouse when paired with Materialized Views, has proven to be an impressive solution. We highly recommend it for organizations seeking a high-performance analytics solution.

Learn More

Visit: https://inigo.io/